Contextual Object Detection with Multimodal Large Language Models

Article: https://arxiv.org/pdf/2305.18273.pdf

Introduction

Object detection, a crucial aspect of computer vision, involves understanding the objects present in a scene and enables applications such as robotics, autonomous driving, and AR/VR systems. Recently, Multimodal Large Language Models (MLLMs) such as Flamingo, PaLM-E, and OpenAI's GPT-4 have demonstrated remarkable abilities in vision-language tasks like image captioning and question answering. Because these models support interactive human-AI communication, they need to model contextual information and the relationships among visual objects, human words, phrases, and dialogues. There is therefore a need to enhance MLLMs so that they can locate, identify, and associate visual objects with language inputs for effective human-AI interaction.

Concepts

Multimodal Large Language Models (MLLMs) combine language comprehension with visual inputs, extending the capabilities of Large Language Models (LLMs) such as the GPT series, T5, PaLM, OPT, and LLaMA. MLLMs have excelled in vision-language tasks like image captioning and visual question answering. However, they are limited to generating text outputs. In contrast, ContextDET, which is built on MLLMs, performs contextual object detection and outputs bounding boxes.

Prompting LLMs with vision experts has also been explored: the textual outputs of an LLM serve as prompts for vision expert models such as DETR and SAM. ContextDET instead uses an end-to-end training pipeline in which latent features from the MLLM act as conditional inputs to a visual decoder that predicts bounding boxes.
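
The difference is that a latent vector, not generated text, reaches the detector. The toy, pure-Python sketch below illustrates the idea: one latent feature conditions a set of object queries, and a small box head maps each conditioned query to a normalized box. The additive conditioning, the single linear box head, and every name below (`condition_queries`, `box_head`, `decode`, the example weights) are assumptions for illustration only. They are not ContextDET's actual decoder, which would use learned transformer layers.

```python
import math
from typing import List

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def condition_queries(queries: List[Vector], latent: Vector) -> List[Vector]:
    """Hypothetical conditioning: add the MLLM latent vector to every learnable query."""
    return [[q_i + l_i for q_i, l_i in zip(q, latent)] for q in queries]


def box_head(query: Vector, weights: List[Vector], bias: Vector) -> Vector:
    """Hypothetical linear box head: 4 outputs squashed to (cx, cy, w, h) in (0, 1)."""
    return [
        sigmoid(sum(w_i * q_i for w_i, q_i in zip(row, query)) + b)
        for row, b in zip(weights, bias)
    ]


def decode(queries: List[Vector], latent: Vector,
           weights: List[Vector], bias: Vector) -> List[Vector]:
    # In a differentiable framework, feeding the latent vector in directly
    # (instead of text) is what would let a box loss train the whole path end-to-end.
    return [box_head(q, weights, bias) for q in condition_queries(queries, latent)]


if __name__ == "__main__":
    queries = [[0.0, 0.0], [1.0, -1.0]]  # two learnable object queries (dim 2)
    latent = [0.5, 0.5]                  # latent feature for one object word
    weights = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.5, 0.5]]
    bias = [0.0, 0.0, -1.0, -1.0]
    for box in decode(queries, latent, weights, bias):
        print([round(v, 3) for v in box])
```

In a two-stage "LLM prompts a vision expert" pipeline, the text handed to DETR or SAM is a discrete bottleneck. Passing the latent vector avoids that bottleneck.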

Contextual understanding in object detection means exploiting multimodal patterns and the relationships between visual images and textual words. ContextDET applies the contextual understanding capability of MLLMs to object detection and proposes new evaluation tasks, such as the cloze test, to assess that understanding.
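
In a cloze test, object words in a caption are hidden, and the model must recover both the missing words and their locations in the image. The minimal sketch below shows only the masking step, assuming the object words are given as character spans. The `[MASK]` token and the `make_cloze` helper are hypothetical and do not come from the paper's benchmark.

```python
from typing import List, Tuple

MASK = "[MASK]"  # hypothetical placeholder token


def make_cloze(caption: str, object_spans: List[Tuple[int, int]]) -> Tuple[str, List[str]]:
    """Replace each non-overlapping (start, end) character span with MASK.

    Returns the masked caption and the hidden words, in left-to-right order.
    """
    pieces: List[str] = []
    answers: List[str] = []
    cursor = 0
    for start, end in sorted(object_spans):
        pieces.append(caption[cursor:start])  # text before this object word
        pieces.append(MASK)
        answers.append(caption[start:end])    # the word the model must infer
        cursor = end
    pieces.append(caption[cursor:])           # trailing text after the last span
    return "".join(pieces), answers


if __name__ == "__main__":
    caption = "a man throws a frisbee to a dog"
    masked, answers = make_cloze(caption, [(2, 5), (15, 22), (28, 31)])
    print(masked)
    print(answers)
```

Several different words (for example "dog" or "puppy") can fill the same mask plausibly. That is why inferring the hidden object words from an open human vocabulary is harder than choosing from a fixed label set.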

Zero-shot object detection remains challenging, especially in real-world scenarios. Open-vocabulary object detection relaxes this setting by allowing the use of additional image-text pairs. CLIP has been widely used for this purpose, while ContextDET demonstrates the effectiveness of MLLMs in the open-vocabulary setting. It is not constrained by predefined base or novel classes: the predicted object names are the most contextually valid English words generated by the MLLM.
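
A closed-set detector chooses its label by an argmax over a fixed class list, so it cannot output a name outside that list. A generative model emits free-form words instead, so scoring it on a benchmark with fixed class names requires mapping those words back onto the benchmark's vocabulary. The sketch below shows one simple, hypothetical way to do that: normalized exact matching plus a hand-written synonym table. This is our assumption for illustration, not the evaluation protocol described in the paper.

```python
from typing import Dict, Iterable, Optional


def normalize(name: str) -> str:
    """Lower-case and collapse whitespace so 'Traffic  Light ' matches 'traffic light'."""
    return " ".join(name.lower().split())


def map_to_benchmark(generated: str, classes: Iterable[str],
                     synonyms: Optional[Dict[str, str]] = None) -> Optional[str]:
    """Return the benchmark class that a free-form generated name maps to,
    or None if the name falls outside the benchmark's vocabulary."""
    synonym_table = {normalize(k): v for k, v in (synonyms or {}).items()}
    lookup = {normalize(c): c for c in classes}
    key = normalize(generated)
    key = normalize(synonym_table.get(key, key))  # optional synonym rewrite
    return lookup.get(key)


if __name__ == "__main__":
    classes = ["dog", "traffic light", "person"]
    print(map_to_benchmark("Traffic  Light ", classes))
    print(map_to_benchmark("puppy", classes, {"puppy": "dog"}))
    print(map_to_benchmark("frisbee", classes))
```

A `None` result here is not necessarily a wrong prediction. It only means the generated word lies outside the benchmark's fixed class list.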

Experiments

We report the results of ContextDET on several tasks: contextual object detection, open-vocabulary object detection, and referring image segmentation. For contextual object detection, we present both quantitative and qualitative results for the cloze test setting, which is particularly challenging because object words must be inferred from a vast human vocabulary. We also provide qualitative results for contextual captioning and contextual question answering.
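
A cloze-test prediction is only useful if both the word and the box are right. The sketch below shows a simplified per-slot check under stated assumptions. A masked slot counts as a hit when the predicted word matches the hidden word exactly (case-insensitive) and the predicted box overlaps the ground truth with IoU of at least 0.5. The threshold, the exact-match rule, and the `cloze_hit_rate` helper are hypothetical. The paper's quantitative protocol may differ, for example by using AP-style metrics.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def cloze_hit_rate(preds: List[Tuple[str, Box]], gts: List[Tuple[str, Box]],
                   iou_thr: float = 0.5) -> float:
    """Fraction of masked slots (compared position by position) whose word
    matches and whose box reaches the IoU threshold."""
    if not gts:
        return 0.0
    hits = sum(
        1 for (pw, pb), (gw, gb) in zip(preds, gts)
        if pw.strip().lower() == gw.strip().lower() and iou(pb, gb) >= iou_thr
    )
    return hits / len(gts)
```

Requiring both conditions means a correct box paired with a different but plausible word (say "puppy" for "dog") still counts as a miss under this strict rule.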

Regarding implementation details, the method is implemented in PyTorch, and all models are trained on a single machine equipped with 4 NVIDIA A100 GPUs. During training, we apply data augmentation techniques such as random horizontal flipping and large-scale jittering. The batch size is set to 8, and the model is trained for 6 epochs using the AdamW optimizer with a learning rate of 1e-4 and a weight decay of 0.05.
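
The reported settings can be collected in a small config object, and the flip augmentation also has to transform the bounding boxes along with the image. The sketch below does both in plain Python. The field names and the `hflip_boxes` helper are our own. The text does not say whether the batch size of 8 is per GPU or in total, and large-scale jittering (random rescaling followed by cropping or padding) is not shown.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass(frozen=True)
class TrainConfig:
    # Values as reported in the text; field names are hypothetical.
    num_gpus: int = 4
    batch_size: int = 8        # per-GPU vs. total is not stated
    epochs: int = 6
    optimizer: str = "AdamW"
    lr: float = 1e-4
    weight_decay: float = 0.05


def hflip_boxes(boxes: List[Box], image_width: float) -> List[Box]:
    """Mirror boxes for a horizontally flipped image.

    A point at x moves to W - x, so the old right edge becomes the new left edge.
    """
    return [(image_width - x2, y1, image_width - x1, y2) for x1, y1, x2, y2 in boxes]


if __name__ == "__main__":
    cfg = TrainConfig()
    print(cfg)
    print(hflip_boxes([(10, 5, 30, 25)], image_width=100))
```

In PyTorch, the optimizer settings would typically be passed as `torch.optim.AdamW(model.parameters(), lr=cfg.lr, weight_decay=cfg.weight_decay)`. Swapping x1 and x2 keeps every flipped box well-formed (x1 < x2).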

Conclusion

ContextDET highlights the untapped potential of Multimodal Large Language Models (MLLMs) in perception tasks beyond vision-language tasks. Specifically, we focus on the contextual object detection task, which involves predicting precise object names and their locations in images for human-AI interaction. However, because associating object words with bounding boxes is costly to annotate, we had to use less training data than previous MLLM papers, which may have limited our final performance. Future research could explore semi-supervised or weakly-supervised learning techniques to reduce annotation costs.

Furthermore, while MLLMs demonstrate contextual understanding abilities, they have other unexplored capabilities that could be leveraged for downstream tasks. For example, we propose investigating their interactive ability for instruction tuning. Can MLLMs refine detection outputs based on human language instructions? Given specific instructions such as adjusting box positions, removing redundant boxes, or correcting predicted classes, can MLLMs adapt their predictions to meet the desired expectations? Exploring these questions could open up new ways of interacting with computer vision systems.

Enhancing Visual Text Generation with GlyphControl

Article: https://arxiv.org/pdf/2305.18259.pdf

Introduction

GlyphControl is an innovative approach that improves text-to-image generation by incorporating glyph conditional information. It allows users to customize the content, location, and size of the generated text. In this blog post, we explore the advantages of GlyphControl and its superior performance compared to existing methods.
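
To make "content, location, and size" concrete, the sketch below models a user's text instruction as a small spec and converts it into the pixel region where the text would be drawn on a glyph image. The `GlyphSpec` fields, the fractional coordinates, the default height-to-width ratio, and the 512x512 canvas are all hypothetical choices for illustration. They are not GlyphControl's actual interface.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class GlyphSpec:
    """Hypothetical user instruction: what text to draw, where, and how large."""
    text: str
    x: float             # left edge, as a fraction of canvas width (0..1)
    y: float             # top edge, as a fraction of canvas height (0..1)
    width: float         # text-box width, as a fraction of canvas width (0..1)
    aspect: float = 0.2  # assumed height / width ratio of the text box


def glyph_region(spec: GlyphSpec,
                 canvas: Tuple[int, int] = (512, 512)) -> Tuple[int, int, int, int]:
    """Pixel box (x1, y1, x2, y2) for the rendered text, clipped to the canvas."""
    w_px, h_px = canvas
    x1 = round(spec.x * w_px)
    y1 = round(spec.y * h_px)
    box_w = round(spec.width * w_px)
    box_h = round(box_w * spec.aspect)
    x2 = min(w_px, x1 + box_w)  # clip so the text box stays on the canvas
    y2 = min(h_px, y1 + box_h)
    return x1, y1, x2, y2


if __name__ == "__main__":
    print(glyph_region(GlyphSpec("OPEN", x=0.25, y=0.5, width=0.5)))
```

Changing `text` alters the content, `x` and `y` move the text, and `width` resizes it. A renderer would then draw the text into this region to form the conditioning image.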

Advantages of GlyphControl

GlyphControl enhances the Stable Diffusion model without retraining it. Users can customize the generated text according to their needs, producing visually appealing and accurate results.
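
One common way to add a new condition to a pretrained diffusion model without retraining it is a ControlNet-style branch. Assuming GlyphControl follows that pattern, the base block stays frozen and a trainable glyph branch is added through a zero-initialized connection. The greatly simplified sketch below shows why this leaves the original model untouched at the start of training: with the connection weight at zero, the output equals the frozen block's output exactly. The function names and the scalar `zero_weight` are hypothetical stand-ins for real network layers.

```python
from typing import Callable, List

Vector = List[float]


def controlled_block(frozen: Callable[[Vector], Vector],
                     control: Callable[[Vector], Vector],
                     zero_weight: float) -> Callable[[Vector, Vector], Vector]:
    """Frozen output plus a scaled control branch.

    `frozen` stands in for a pretrained Stable Diffusion block whose weights are
    never updated; only `control` and `zero_weight` would be trained.
    """
    def forward(x: Vector, glyph: Vector) -> Vector:
        base = frozen(x)     # pretrained path, unchanged
        ctrl = control(glyph)  # glyph-conditioned path
        return [b + zero_weight * c for b, c in zip(base, ctrl)]
    return forward


if __name__ == "__main__":
    frozen = lambda x: [2.0 * v for v in x]
    control = lambda g: [v + 1.0 for v in g]
    print(controlled_block(frozen, control, 0.0)([1.0, 2.0], [5.0, 5.0]))
    print(controlled_block(frozen, control, 0.5)([1.0, 2.0], [5.0, 5.0]))
```

Because the connection starts at zero, training can only gradually introduce glyph information. The pretrained model's behavior is the starting point rather than something that must be relearned.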

The LAION-Glyph Benchmark Dataset

GlyphControl introduces LAION-Glyph, a training benchmark dataset that helps researchers evaluate visual text generation approaches effectively.

Superior Performance

GlyphControl outperforms the DeepFloyd IF approach in terms of OCR accuracy and CLIP scores, demonstrating its effectiveness in generating high-quality visual text.
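
OCR accuracy asks whether the text in a generated image can be read back correctly. The minimal sketch below assumes an OCR engine has already transcribed each generated image. It counts an image as correct when the transcription exactly matches the requested text, with an optional case-insensitive variant. The `ocr_accuracy` helper and the exact-match rule are our assumptions. The paper may report several variants, and the CLIP score is not shown here.

```python
from typing import List


def ocr_accuracy(targets: List[str], recognized: List[str],
                 case_sensitive: bool = True) -> float:
    """Share of images whose OCR transcription exactly matches the requested text."""
    if len(targets) != len(recognized):
        raise ValueError("targets and recognized must have the same length")
    if not targets:
        return 0.0

    def norm(s: str) -> str:
        s = s.strip()
        return s if case_sensitive else s.lower()

    hits = sum(norm(t) == norm(r) for t, r in zip(targets, recognized))
    return hits / len(targets)


if __name__ == "__main__":
    requested = ["Hello", "OPEN", "Cafe"]
    read_back = ["Hello", "open", "Cafe"]  # hypothetical OCR output
    print(ocr_accuracy(requested, read_back))
    print(ocr_accuracy(requested, read_back, case_sensitive=False))
```

Exact matching is deliberately strict: one wrong character counts the whole image as a failure. This strictness suits visual text generation, where a single misspelled letter is immediately visible.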

Future Implications

GlyphControl opens up new possibilities in content creation, design, and advertising. Further advancements are expected as researchers build on GlyphControl's foundation.

Conclusion

GlyphControl is a powerful approach that improves text-to-image generation by leveraging glyph conditional information. It offers customization options, performs well compared to existing methods, and has promising implications for various applications.

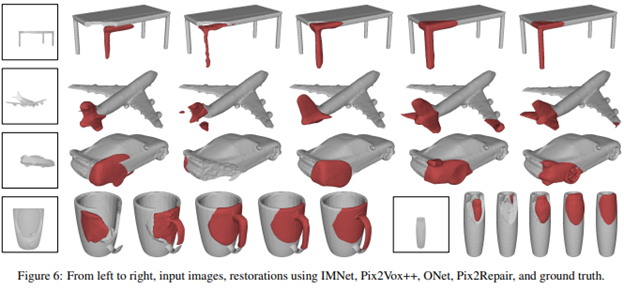

Pix2Repair: Automated Shape Repair from Images

Article: https://arxiv.org/pdf/2305.18273.pdf

Introduction:

Pix2Repair is an innovative approach that automates shape repair by generating restoration shapes from images. This eliminates the need for expensive 3D scanners and manual cleanup, making the process more accessible and scalable.

Problem:

Traditional shape repair methods rely on high-resolution 3D meshes obtained through costly 3D scanning. This approach is time-consuming and limits accessibility.

Solution:

Pix2Repair takes an image of a fractured object as input and generates a 3D-printable restoration shape. It uses a novel shape function that deconstructs a latent code representing the object into a complete shape and a break surface.
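
A complete shape and a break surface can be combined to yield both the fractured piece and the missing restoration. The toy sketch below uses occupancy functions (point in, inside or outside out) to show one way this can work. The fractured piece is the part of the complete shape on the kept side of the break, and the restoration is the part on the other side. This Boolean composition, the sphere, and the planar break are assumptions for illustration. In Pix2Repair both parts are decoded from a latent code by a learned network, and real breaks are irregular surfaces rather than planes.

```python
import math
from typing import Callable, Tuple

Point = Tuple[float, float, float]
Occupancy = Callable[[Point], bool]


def sphere(radius: float) -> Occupancy:
    """Toy complete shape C: a solid sphere centred at the origin."""
    return lambda p: math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) <= radius


def half_space(normal: Point, offset: float) -> Occupancy:
    """Toy break indicator B: True on the side of a plane that the fractured piece keeps."""
    return lambda p: sum(n * c for n, c in zip(normal, p)) <= offset


def fractured(complete: Occupancy, keep: Occupancy) -> Occupancy:
    # Fractured piece: inside the complete shape AND on the kept side of the break.
    return lambda p: complete(p) and keep(p)


def restoration(complete: Occupancy, keep: Occupancy) -> Occupancy:
    # Restoration: inside the complete shape AND on the missing side of the break.
    return lambda p: complete(p) and not keep(p)


if __name__ == "__main__":
    c, b = sphere(1.0), half_space((1.0, 0.0, 0.0), 0.3)
    for p in [(0.0, 0.0, 0.0), (0.8, 0.0, 0.0), (2.0, 0.0, 0.0)]:
        print(p, fractured(c, b)(p), restoration(c, b)(p))
```

Every point inside the complete shape belongs to exactly one of the two pieces. This is why predicting the complete shape and the break together is enough to recover a restoration that fits the fractured object.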

Summary:

Pix2Repair reframes shape repair as image-based restoration. It eliminates the need for expensive 3D scanners and manual cleanup, offering a more accessible and scalable solution.

Key Contributions:

Image-Based Restoration: Pix2Repair generates restoration shapes directly from images, eliminating the need for 3D scanning.

Novel Shape Function: The proposed shape function deconstructs a latent code into a complete shape and a break surface, enabling accurate restoration.

Dataset Applications: Successful restorations were demonstrated for synthetic fractures and cultural heritage objects from various datasets.

Overcoming Challenges: Pix2Repair handles axially symmetric objects by predicting view-centered restorations.

Superior Performance: Pix2Repair outperforms shape completion approaches on various metrics, including Chamfer distance and normal consistency.
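
Chamfer distance measures how far two point clouds are from each other. For each point, it finds the nearest point in the other cloud, averages those distances in both directions, and sums the two averages. The brute-force sketch below uses squared Euclidean distances and means. Conventions vary (squared or unsquared distances, sums or means, halving the total), and the exact variant and scale used in the paper are not stated here. Normal consistency is not shown.

```python
from typing import List, Sequence

Point = Sequence[float]


def _sq_dist(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def chamfer_distance(p: List[Point], q: List[Point]) -> float:
    """Symmetric Chamfer distance: mean squared nearest-neighbour distance from
    P to Q plus the same from Q to P. O(|P| * |Q|), fine for small clouds."""
    if not p or not q:
        raise ValueError("point sets must be non-empty")
    p_to_q = sum(min(_sq_dist(a, b) for b in q) for a in p) / len(p)
    q_to_p = sum(min(_sq_dist(b, a) for a in p) for b in q) / len(q)
    return p_to_q + q_to_p


if __name__ == "__main__":
    print(chamfer_distance([(0, 0, 0)], [(1, 0, 0)]))
    print(chamfer_distance([(0, 0, 0), (1, 0, 0)], [(0, 0, 0)]))
```

Lower is better. Because the distance is symmetric, a prediction is penalized both for missing parts of the ground truth and for adding geometry that is not there. For surfaces sampled with thousands of points, a spatial index such as a k-d tree would replace the brute-force inner loop.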

Conclusion:

Pix2Repair offers an automated shape repair solution that works from images, removing the need for expensive 3D scanning equipment. Its novel approach shows promising results in restoring fractured objects, making shape repair more accessible and efficient. This innovation has the potential to transform the field of object restoration and benefit researchers, conservators, and restoration professionals.
